Teamfight Tactics (TFT) is an auto-battler game in the League of Legends universe. Success depends on luck, strategy, and keeping an eye on what your opponents are doing. I wonder if I can use the supreme power of TensorFlow to help me do the last bit?

The problem

Given a screenful of information, can I use object detection & classification to give me an automated overview?

What I’ve learned about machine learning

I usually write these posts as I’m going, so the experience is captured and the narrative flows (hopefully) nicely. But with this bit of ‘fun’ I’ve learned two hard facts:

- Python, and its ecosystem of hacked-together packages, is an unforgiving nightmare. The process goes like this: a) install package, b) run snippet of code, c) try to debug code, d) find nothing on Google, keep trying, e) eventually find a reference to a bug reported in package version x, f) install a different version [return to b]. It’s quite common to find that things have stopped being supported, or that ‘no-one uses anything after 1.2.1, because it’s too buggy’. It’s also quite common to only make progress via gossip and rumour.

- The whole open source ML world is still very close to research. It’s no wonder Google is pushing pre-packaged solutions via GCP: getting something to work on your own PC is hard work, and the steps involved are necessarily complicated (this *is* brain surgery.. kinda).

But I’ve also found that I, a mere mortal, can get a basic object detection model working on my home PC!

The Approach

This is very much a v0.1 – I want to eventually do some fancy things, but first I want to get something basic to work, end to end.

Here are the steps:

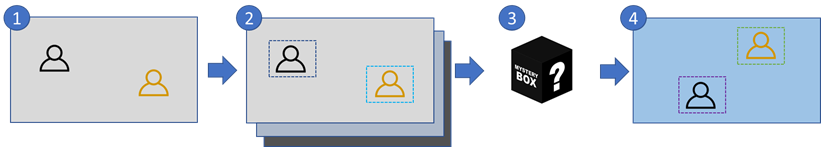

- My problem – I have an image containing some (different) characters which I want to train the computer to be able to recognise in future images

- I need to get a lot of different images, and annotate them with the characters

- I feed those images and the annotations into a magic box

- The magic box can then (try to) recognise those same characters in new images

One of the first things I’ve realised is that this is actually two different problems. First, detect that a thing is there (and where it is), then try to recognise which thing it is.

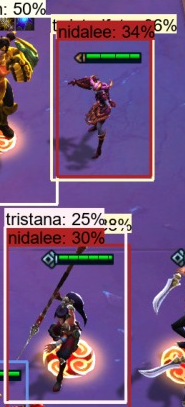

In this early run, which I did mainly to test the pipework, the algorithm detects that the 2 characters in the foreground are present, but incorrectly labels one of them. In its defence, it’s not confident in EITHER.

So, to be clear, it’s not enough that I can teach it to recognise ‘character-like’ things, it also has to be able to tell them apart.
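To make the ‘detect, then recognise’ split a bit more concrete, here is a minimal sketch of what a detector’s output roughly looks like and how low-confidence guesses could be filtered out. The `Detection` class, the `confident` helper, the 0.4 threshold and all of the scores and boxes are made up for illustration; they are not the output of my model.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # which character the model thinks this is
    score: float  # how confident it is, from 0.0 to 1.0
    box: tuple    # (ymin, xmin, ymax, xmax), as fractions of the image size


def confident(detections, min_score=0.5):
    """Keep detections scoring at least min_score, most confident first."""
    kept = [d for d in detections if d.score >= min_score]
    return sorted(kept, key=lambda d: d.score, reverse=True)


# Illustrative values only
detections = [
    Detection("nidalee", 0.41, (0.55, 0.30, 0.70, 0.40)),
    Detection("shyvana", 0.38, (0.40, 0.32, 0.55, 0.42)),
    Detection("wukong", 0.91, (0.10, 0.80, 0.25, 0.90)),
]

for d in confident(detections, min_score=0.4):
    print(f"{d.label}: {d.score:.0%} at {d.box}")
```

The box says ‘there is something here’, the label says ‘and I think it is this’, and the score covers both at once, which is why a correctly drawn box can still carry the wrong name.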

Step 1: Getting TensorFlow installed

I’ve had my Python whinge, so this is all business. I tried 3 approaches:

- Install a Docker image

- Run on a (free) Google Colab cloud machine

- Install it locally

In the end I went with the third option, installing it locally. I don’t really understand Docker enough to work out if it’s broken, or I’m not doing it right. And I like the idea of running it locally so I understand more about the moving parts.

I followed several deeply flawed guides before finding this one (Installation — TensorFlow 2 Object Detection API tutorial documentation), which was only a bit broken towards the end. What I liked about it was how clear each step was, and up to a certain stage, everything just worked.

Step 2: Getting some training data

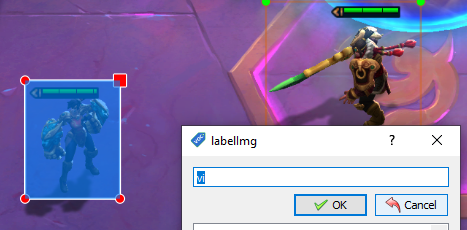

I need to gather enough images to feed into the model to train it, which I’m doing by taking screenshots in-game and annotating them with a tool called labelImg.

I’m not sure how many images I need, but I’m going to assume it’s a lot. There are 59 characters in the game at the moment, and each of those has 3 different ‘levels’. There are also other things I’d like to spot too, but for now, I’ll stick with the characters.

I’ve got about 90 images, taken from a number of different games. The characters can move around, and there are different backgrounds depending on the player, so there should be a reasonable amount of information available. There can also be the same character multiple times on each screenshot.

I started out labelling the characters as name – level (e.g. garen – 2), which is ultimately where I want to be. But increasing the number of things I want to spot while keeping the training data fixed means some characters have few (or no) observations.
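A quick way to see how thin the data gets is to count how often each label appears in the annotations. This is a minimal sketch, assuming the annotations were saved in labelImg’s Pascal VOC XML format (one `<object>` with a `<name>` per box). The `count_labels` helper, the sample file name and the `garen-2` style label spelling are placeholders of mine.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def count_labels(xml_documents):
    """Count how many boxes carry each label across a set of VOC XML strings."""
    counts = Counter()
    for doc in xml_documents:
        root = ET.fromstring(doc)
        for obj in root.iter("object"):
            counts[obj.findtext("name")] += 1
    return counts


# A cut-down, made-up annotation; a real one has more fields per object
SAMPLE = """<annotation>
  <filename>screenshot_001.png</filename>
  <object><name>garen-2</name>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>60</xmax><ymax>90</ymax></bndbox>
  </object>
  <object><name>garen-1</name>
    <bndbox><xmin>100</xmin><ymin>20</ymin><xmax>150</xmax><ymax>90</ymax></bndbox>
  </object>
  <object><name>garen-2</name>
    <bndbox><xmin>200</xmin><ymin>20</ymin><xmax>250</xmax><ymax>90</ymax></bndbox>
  </object>
</annotation>"""

# Rarest labels first, since those are the ones the model will struggle with
for label, n in sorted(count_labels([SAMPLE]).items(), key=lambda kv: kv[1]):
    print(label, n)
```

With real files, the strings would come from reading each `.xml` file next to its screenshot. With 59 characters at 3 levels each, that is 177 possible labels spread over about 90 screenshots, so plenty of rows in this count will be tiny or missing.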

Step 3: Running the model

I’m not going to describe the process in too much detail, as I’ve heavily borrowed from the guide linked earlier. The rough outline of steps is as follows:

- Partition the data into train & test (I’ve chosen to put 25% of my images aside for testing)

- Build some important files for the process – one is just a list of all the things I want to classify, the other (I think) contains data about the boxes I’ve drawn around the characters in each image.

- Train the model – this seems to take AGES, even when run on the GPU.. more about this later

- Export the model

- Import the model and run it against some images
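The first two steps in that list can be sketched in a few lines. This is not the guide’s own script, just a minimal illustration: `split_train_test` and `label_map_text` are hypothetical helpers, the file names are invented, and the `item { id ... name ... }` layout is the label map text format the TensorFlow Object Detection API reads, with ids starting at 1.

```python
import random


def split_train_test(filenames, test_fraction=0.25, seed=42):
    """Shuffle reproducibly, then set aside test_fraction of the files for testing."""
    shuffled = sorted(filenames)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def label_map_text(class_names):
    """Build label map text: one numbered item per distinct class name."""
    entries = []
    for idx, name in enumerate(sorted(set(class_names)), start=1):
        entries.append(f"item {{\n    id: {idx}\n    name: '{name}'\n}}")
    return "\n".join(entries) + "\n"


# Placeholder file names standing in for the ~90 screenshots
images = [f"screenshot_{i:03d}.png" for i in range(90)]
train, test = split_train_test(images)
print(len(train), "training images,", len(test), "test images")

print(label_map_text(["garen", "nidalee", "garen"]))
```

Fixing the seed means the same images land in the test set on every run, which makes it easier to compare one training run with the next.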

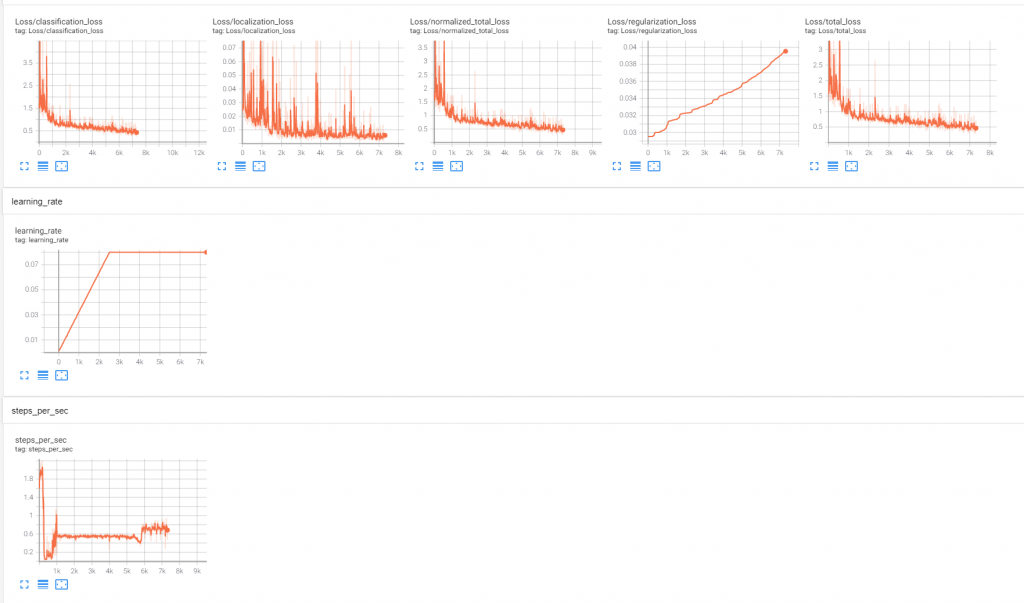

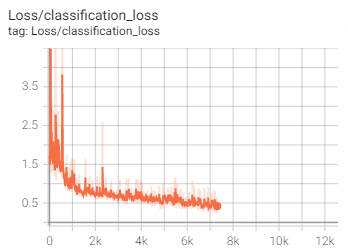

During the training, I can monitor progress via ‘Tensorboard’, which looks a bit like this:

I’m still figuring out what the significance of each of these measures is, but generally I think I want loss to decrease, and certainly to be well below 1.

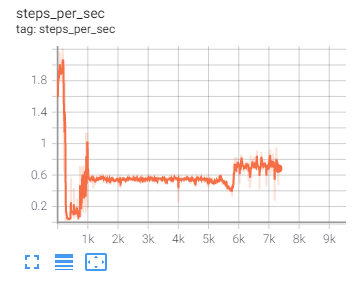

An interesting thing I noticed was that the processing speed seems to vary a lot during a run, and particularly drops off a cliff between steps 200 and 300.

I’m not sure why this is happening, but it’s happened on a couple of runs, and when the speed increases again at around step 600, the loss becomes much less noisy.

There seems to be a gradual improvement across 1k+ steps, but I’m not sure if I should be running this for 5k or 50k. I also suspect that my very small number of training images means that the improvement will turn into overfitting quite quickly.
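One common heuristic for the ‘5k or 50k’ question is to evaluate on the held-back test images every so often and stop once that loss hasn’t improved for a while. I haven’t done this yet; the sketch below only shows the idea, and the `best_step` helper, the `patience` value and the loss numbers are all invented.

```python
def best_step(eval_losses, patience=3):
    """Return the step with the lowest evaluation loss, giving up once the
    loss has failed to improve for `patience` evaluations in a row.

    eval_losses is a list of (step, loss) pairs in training order.
    """
    best_loss = float("inf")
    best = None
    stale = 0
    for step, loss in eval_losses:
        if loss < best_loss:
            best_loss, best, stale = loss, step, 0
        else:
            stale += 1
            if stale >= patience:
                break  # the test loss has stopped improving
    return best


# Made-up numbers: the test loss bottoms out, then creeps back up
history = [
    (1000, 1.20), (2000, 0.90), (3000, 0.80),
    (4000, 0.85), (5000, 0.90), (6000, 0.95),
]
print("Best checkpoint at step", best_step(history))
```

If the training loss keeps falling while the test loss climbs like this, the model is probably memorising my small set of screenshots rather than learning the characters.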

Step 4a: The first attempt

I was so surprised the first attempt even worked that I didn’t bother to save any screenshots of the progress, only the one image I gleefully sent on WhatsApp.

At this stage, I realised that getting this to work well would be more complicated than I’d hoped, so I reduced the complexity of the problem by ignoring each character’s level.

The characters do change appearance as they level up, but they’re similar enough that I think it’s better to build the model on fewer, more general cases.
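Dropping the level doesn’t have to mean re-drawing every box, since the labels can be rewritten in bulk. Here is a minimal sketch, assuming labels are spelled like ‘garen – 2’ or ‘garen-2’ (name, a dash, then the level); the `strip_level` helper is mine, and the exact spelling in the annotation files may differ.

```python
import re

# A hyphen or en dash followed by digits at the very end of the label
LEVEL_SUFFIX = re.compile(r"\s*[-\u2013]\s*\d+\s*$")


def strip_level(label):
    """Turn 'garen - 2' into 'garen'; labels without a level pass through."""
    return LEVEL_SUFFIX.sub("", label).strip()


for raw in ["garen \u2013 2", "garen-1", "nidalee"]:
    print(repr(raw), "->", repr(strip_level(raw)))
```

Applied to every label before training, this folds the three levels of each character into a single class, so each class gets roughly three times as many examples.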

Step 4b: The outcome

Success! A box, drawn correctly, and a name guessed which is correct!

Ok, so that first one might be a bit too good to be true, but I’m really happy with the results overall. Most of the characters are predicted correctly, and I can see where it might be getting thrown by some of the graphical similarities.

Here, for example, the bottom character is correctly labelled as Nidalee, but the one above (Shyvana) has a very similar pose, so I can imagine how the mistake was made.

And here, the golden objects on the side of the screen could be mistaken for Wukong.. if you squint.. a lot.

Next steps

I’m really pleased with the results from just a few hours of tinkering, so I’m going to keep going and see if I can improve the model. Next steps:

- More training data

- Try modifying the existing images (e.g. removing colour) to speed up processing

- Look at some different model types

- Try to get this working in real-time

Most of the imagery on this page is of characters who belong to Riot, who make awesome games

<See part 2 here>
